Can Jin

Ph.D. Candidate in Computer Science, Rutgers University

I am a Ph.D. Candidate in Computer Science at Rutgers University, New Brunswick, advised by Professor Dimitris N. Metaxas. My research interests include the pre-training, post-training, and inference of large foundation models, efficient AI, and AI agents.

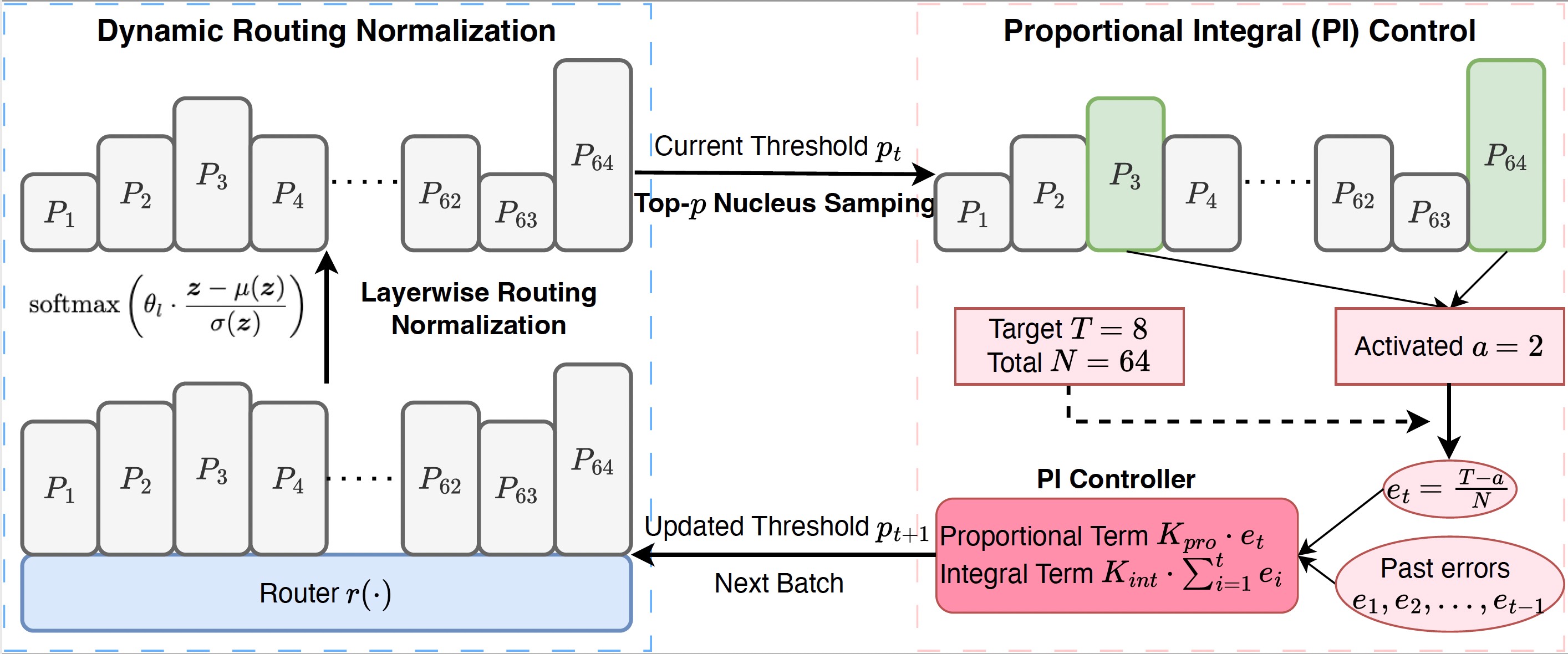

I earned my M.S. and B.S. degrees in Mathematics from the University of Science and Technology of China (USTC). Before my doctoral studies, I worked as a Machine Learning Engineer at Meituan Dianping Corporation, developing forecasting models for logistics and supply chain management. More recently, as a Research Intern at Adobe Research, I focused on the efficient pre-training of large foundation models (LLMs and DiTs) via dynamic-routing Mixture-of-Experts (MoE).

I am open to collaboration on related projects. Please feel free to reach out via email if you share similar interests.

I am actively seeking research internship opportunities for Summer 2026, focusing on the pre-training, post-training, and inference of large foundation models, efficient AI, and AI agents. You can find my CV here.

Research

Large Foundation Models Pre-training, Post-training, and Inference

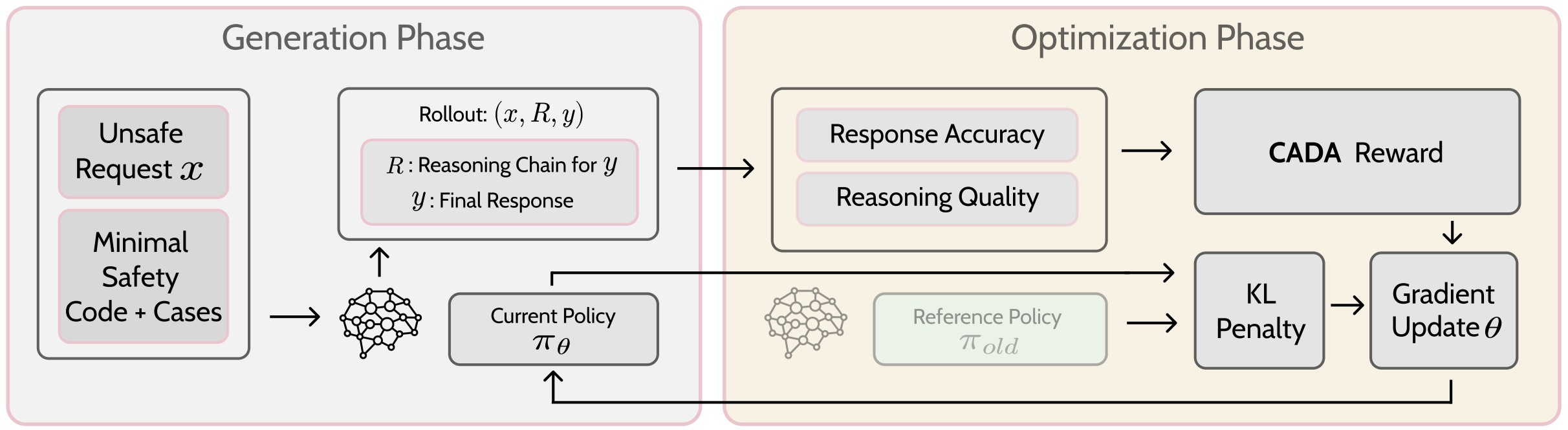

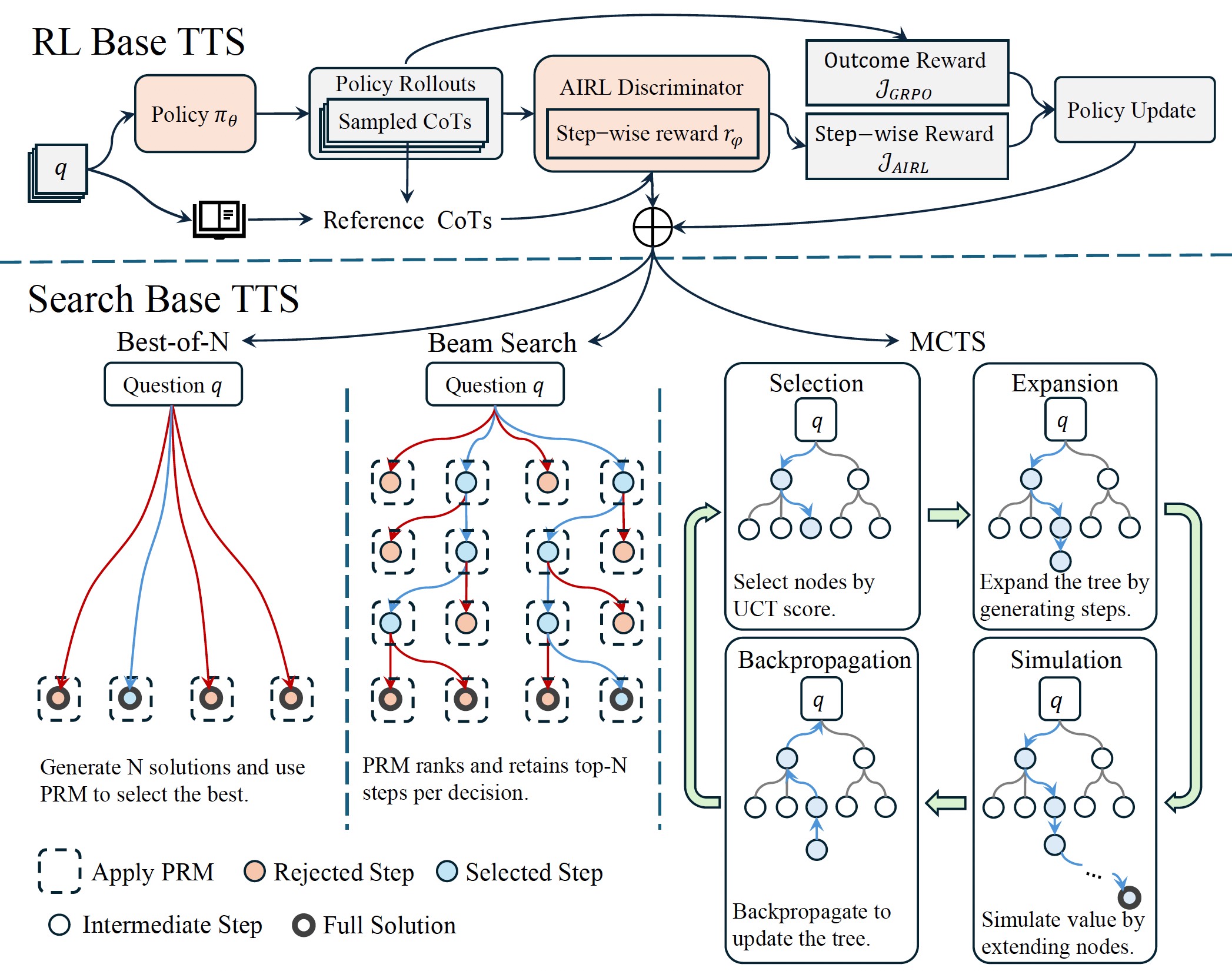

- Focus: Making pre-training and post-training more effective at improving generalization and reasoning capabilities.

- Approach: Investigating advanced training techniques including Mixture-of-Experts, supervised fine-tuning, reinforcement learning, and inference-time scaling methods such as self-refinement and tree search.

- Outcomes: NeurIPS 2024, WWW 2025 (RelWeb), arXiv 2025.

Efficient AI

- Focus: Improving the efficiency of model training and inference.

- Approach: Exploring methods such as model distillation, pruning, and prompting.

- Outcomes: ICLR 2025, ICML 2025, NeurIPS 2025, AAAI 2025, and WWW 2025 (RelWeb).

AI Agents

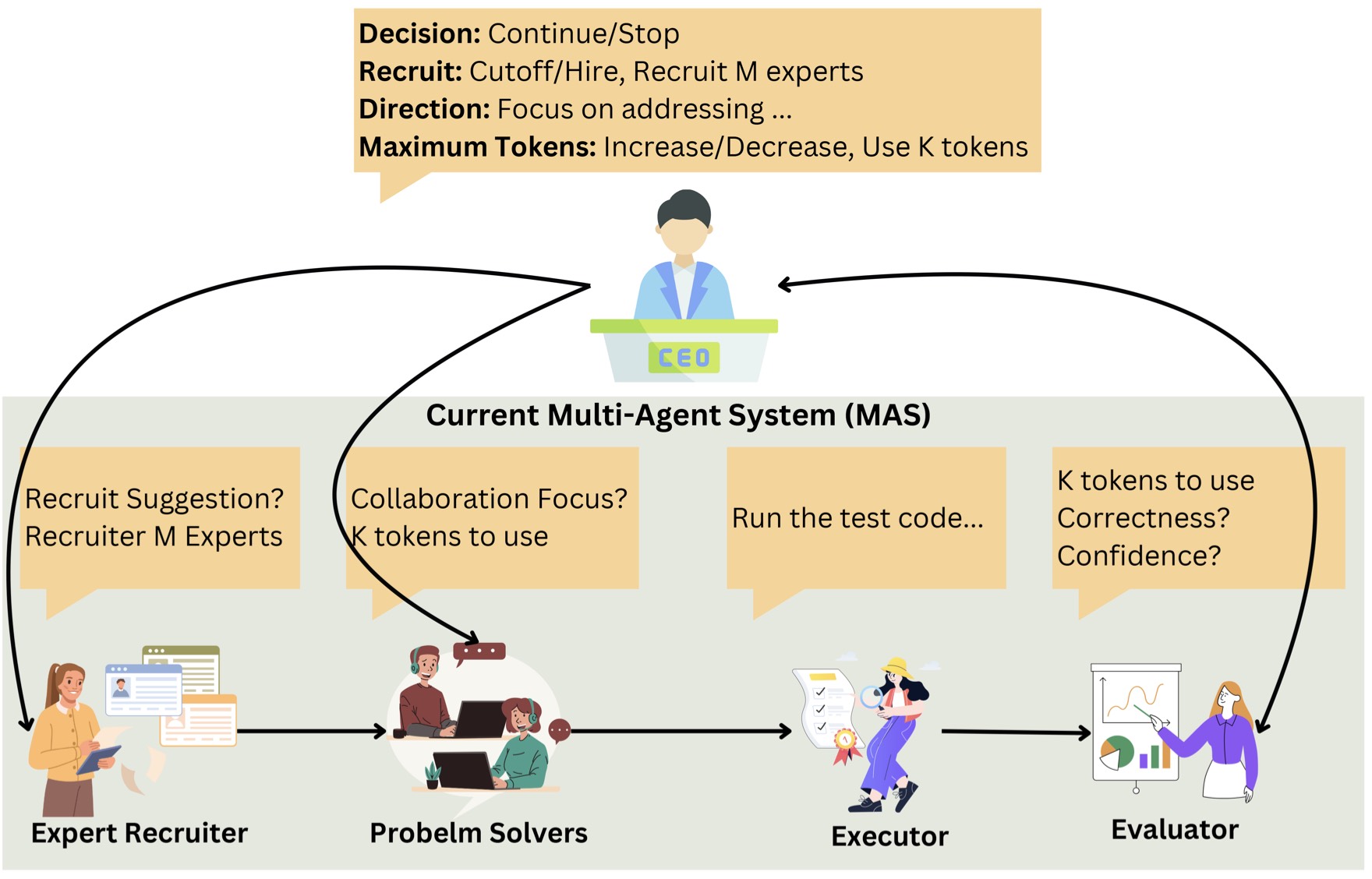

- Focus: Enhancing the performance of AI agents in multi-agent systems.

- Approach: Utilizing reinforcement learning, supervised fine-tuning, and AI data generation pipelines.

- Outcomes: NeurIPS 2025 (SEA), arXiv 2025.

Academic Services

Teaching Assistant

- Rutgers University:

  - CS534: Computer Vision (Spring 2025)

  - CS210: Data Management for Data Science (Fall 2024)

  - CS211: Computer Architecture (Fall 2025)

Peer Review

- Conference: NeurIPS 2025, CVPR 2025/2026, ICLR 2025, AAAI 2026, ICML 2024

- Journal: Alexandria Engineering Journal, Information Fusion, Pattern Recognition, Signal Processing